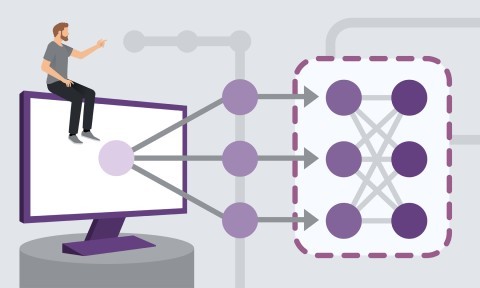

Attention-based models allow neural networks to focus on the most important features of the input, which tends to produce better results at the output. In this course, Janani Ravi explains how recurrent neural networks work, then builds and trains two image captioning models, one without attention and one with attention, and compares their results. If you have some experience with neural networks and want to see what attention-based models can do for you, check out this course.